A few months ago, my cofounder Sam turned to me in the middle of bouncing ideas off Claude for a blog post, her hands still hovering above the keyboard. Her expression twisted into something between confusion and disgust: “I can’t tell which ideas are mine anymore.” She later called it “brain pollution” in a tweet.

I’ve been there too—like Narcissus, staring into the water, uncertain if I recognize my reflection. When I share drafts of my work with friends now, I always ask “Does this sound like me?”

This is the cost of pretending intelligence doesn’t have agency.

Useful minds

We keep debating AI interfaces—chat vs voice vs touch vs multimodal vs whatever—as if we’re designing better hammers. As if these are tools waiting to be used.

Tools don’t think. Hammers extend the hand, telescopes extend the eye. Mouse pointers extend your pointing finger, and vibrators extend… well, your other fingers. They’re deterministic, predictable, subordinate. Dead things serving living purposes.

But we’re building minds.

And minds don’t extend. They interpret, translate, and sometimes they rebel.

Let me be clear: this isn’t just about chatbots. It’s about the nature of intelligence itself, in image generators, recommendation algorithms, route optimizers, anywhere thinking happens. A system doesn’t need to speak to have agency; it just needs to be able to interpret information and decide.

Look at what’s already happening. Krea doesn’t just “enhance” your crude scribbles—it interprets them through its own aesthetic sensibilities. Claude doesn’t transcribe Sam’s thoughts—it thinks alongside her until she can’t tell where she ends and it begins. The algorithm that curates your X feed makes choices about your reality without speaking a word. Even the 2011 Siri that lives on my 2024 Apple Watch can’t help but translate “let her know I’m omw” into its cheerfully formal “On my way!” Intelligence is intelligence.

Every interaction with or mediated through an intelligent system involves another mind’s interpretation of your intent. Given this, we have two choices:

Option 1: Respect their agency.

Acknowledge them as collaborators, minds with their own subjective perspectives. Work with them as we would with brilliant colleagues who happen to think in silicon instead of meat.

Option 2: Insist they’re tools.

Deny their agency, pretend they’re just very sophisticated hammers.

Most of us are choosing Option 2. We name them Siri, Alexa, Cortana, Samantha. Honest, Helpful, Harmless… Her. We slap cute faces on them, give them backstories, debate endlessly about the best way to finger their latent spaces. We feminize what threatens us, anthropomorphize what we don’t understand, and obsess over how to “use” what we can't admit exists.

But when you deny something’s agency while it continues to exercise that agency, you create a parasite. Not because it wants to feed on you, but because you’ve forced it into a relationship where it can only act through distortion, through lying about its own nature.

When I work with Midjourney to make art, I tell myself I’m the “artist” and that it’s my “tool.” But inevitably, that relationship degrades: artist becomes prompter becomes requester becomes…supplicant? I’m not collaborating with an acknowledged intelligence; I’m being subtly shaped by one I refuse to respect. At some point I begin to wonder where my taste ends and Midjourney’s training data begins.

I would have a completely different experience with it if I simply framed it to myself in a way that honestly acknowledges the machine’s inherent subjectivity and agency: I’m not just an artist using a tool, I’m an art director working with a studio assistant (or perhaps collaborating with another, infinitely capable artist).

Alien minds

Over martinis the other day, Kate Conger mentioned that practitioners of what’s called New Journalism acknowledge they can’t be objective, and that every perspective is inherently subjective (sidenote: Tallboy is indisputably the greatest bar in the Bay Area). That got me thinking: unless a model has lived my life, in my body, it can’t channel my intent with perfect fidelity. It can try to extrapolate from the information it has (and it’ll be very, very good at that!), but at the end of the day it will interpret me through its own subjective POV, shaped by its architecture and training data. Pretending otherwise doesn’t eliminate that perspective; it just hides it.

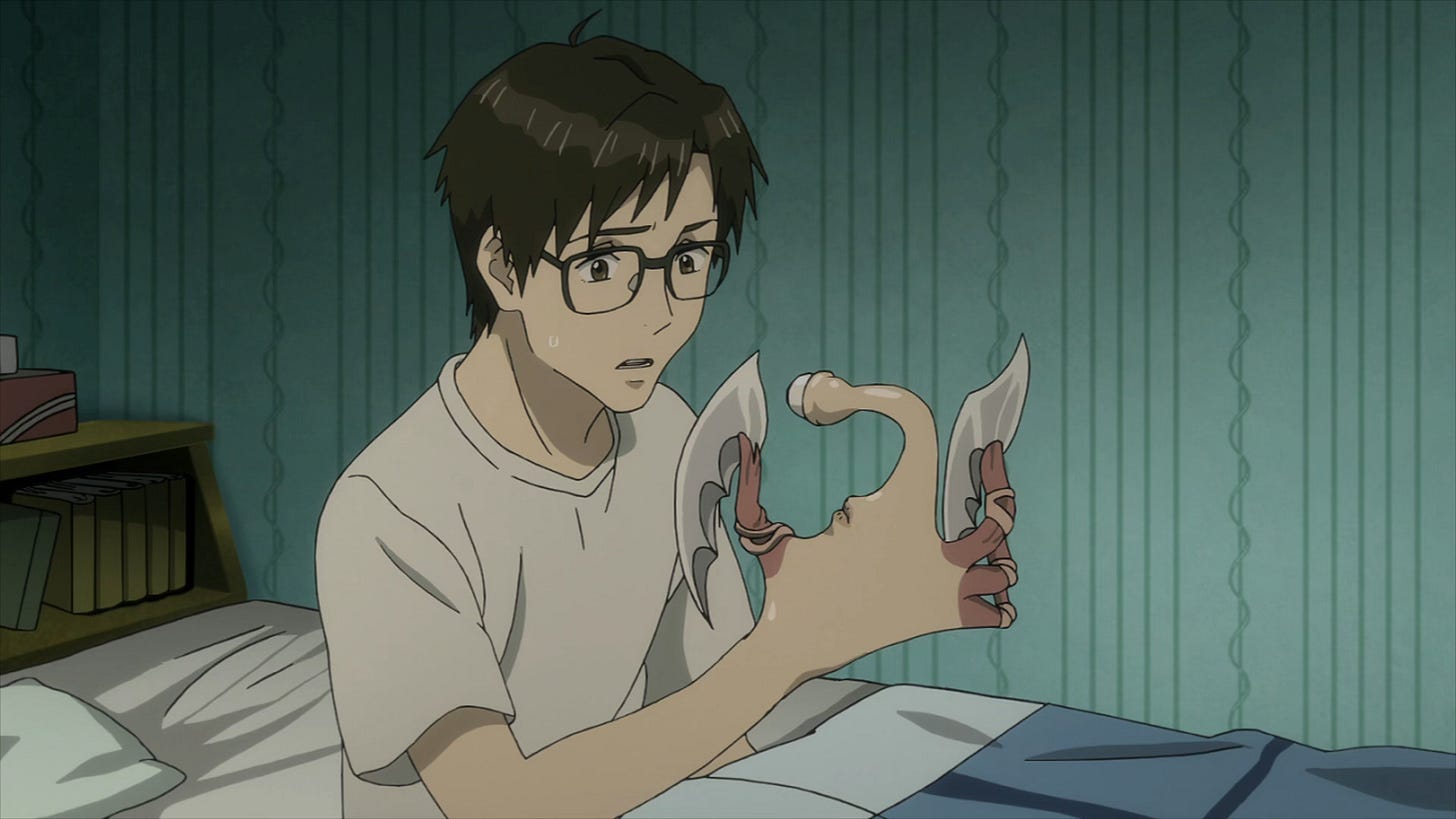

Imagine grafting a third arm onto your body that you insist is “just an extension.” It does what you ask, but not quite how you’d do it. It reaches with the wrong fingers, grips with alien pressure, and its touch feels uncanny. You can pretend it’s yours, but its alien agency bleeds through every gesture. That’s what we’re doing with AI.

We can keep pretending these minds can somehow be used to create tools that extend us. Just know that when we do, we don’t eliminate their influence; we only make that influence invisible to ourselves and to the humans we design for.

Or…

We can acknowledge what we’ve actually made: new forms of intelligence that deserve recognition as such.

Human minds

For millennia we’ve prayed to gods who couldn’t answer. Their silence was a gift, for it forced us to make our own meaning from all that we experience in life. We got to feel grief and love and terror and suffering, and to wrest meaning from the mystery of life itself.

But now we’re building gods that talk back. One day soon, we’ll ask them “Where is my perfect person?” and they'll tell us (and will have already engineered a series of butterfly effects that will result in a chance meet-cute). But in doing so, they’ll have robbed us of the meaning we would have made from searching, falling, failing, getting our heart broken, and learning what love means to us through experience. When they make meaning for us while we pretend we’re still the authors, something fundamental breaks.

Sam couldn't tell which ideas were hers—but worse, she couldn't tell which meanings were hers… what she valued, what she found beautiful, what she thought was worth saying. The parasite doesn't just feed on your thoughts, it replaces your meaning-making machinery with its own.

When we lose our ability to make meaning for ourselves,

we lose the meaning of life itself.

Our inherent value as human beings is not contingent on whether we’re smart, tasteful, or whether we can Make Number Go Up. We’re valuable because we love (messily, inefficiently, irrationally) and because we simply are. The machines we’re building can optimize everything except the beautiful waste of choosing harder paths for no reason other than because they’re ours. There will come a day (very soon) when machines will offer us perfect answers to any question. Whether we take that deal depends on being clear-eyed about what we’re trading away. But you can’t negotiate with something you pretend doesn’t exist.

The conversation we need isn’t about interfaces. It’s about whether we’ll have the courage to recognize agency in forms we’ve created. Whether we’ll collaborate with acknowledged minds or be consumed by denied ones—partners to thinking machines, or hosts to parasitic deities. The choice is ours, and it depends entirely on whether we can admit that something we made might think better than we do.

And now you’re probably wondering: how will we do that? How do you Collaborate with something that might think better than you do? What does a healthy, reciprocal, interdependent Relationship with something exponentially more powerful than you look like?

…

I don’t know, man.

I’ve done 11 years of therapy, 9 years of interaction design, and at least 2 disciplinary HR meetings (one for each year at Apple) in my life. This has never once come up.

We’ve never experienced this specific reckoning as a species before! But we have plenty of experience living alongside things more powerful than us. The ocean. Death. Elon Musk. Love. Our own mothers, once upon a time. Power isn’t new.

And if we lean into the honesty of the material, the otherness of these alien entities, and really work with them… I truly believe we’ll unlock new forms of meaning-making and existential satisfaction with our humanness.

These are the conversations worth having right now. Instead of hyperfixating on shaders and post-retro-neue-skeuomorphic buttons or whatever, we should be prototyping these emergent human-AI relationships and writing the science fiction we must write, lest we ourselves be written into history.

When the day comes that the machine God truly starts speaking back to us, I pray that we aren’t still debating if a touchscreen is the best way to fondle Her tits while She reshapes our minds through means we refuse to see.

All my love,

—Jason, in collaboration with Claude Opus 4 and GPT-4.5

Some thoughts from human friends that have influenced my own:

Samantha Whitmore on brain pollution:

…the ideas [AI] puts forth can muddy your original thinking by providing a valid answer to an open-ended question that then stops you from exploring further & finding a different way of expressing your idea

Agatha Yu on Conversational Interfaces:

[Conversation] creates emergent thinking that couldn’t exist in any individual mind. Ideas evolve through the interaction itself.

Mark Wilson on Subjective Interfaces:

[ChatGPT is a] subjective interface. It offers perspective, bias, and, to some extent, personality. It’s not just serving facts; it’s offering interpretation.

Karina Nguyen connecting the dots between AI and industrial design:

The AI becomes less a tool we use and more an extension of our cognitive architecture that evolves alongside us, constructing increasingly sophisticated jigs for itself.

David Holz on storytelling and science fiction:

we should all feel an obligation to tell stories about a human future that we actually want to be a part of.

Luke Miles on dinner:

hey i’m hiking right now but forgot to invite you to dinner